Natural Language Interface for Controlling Robotic Systems

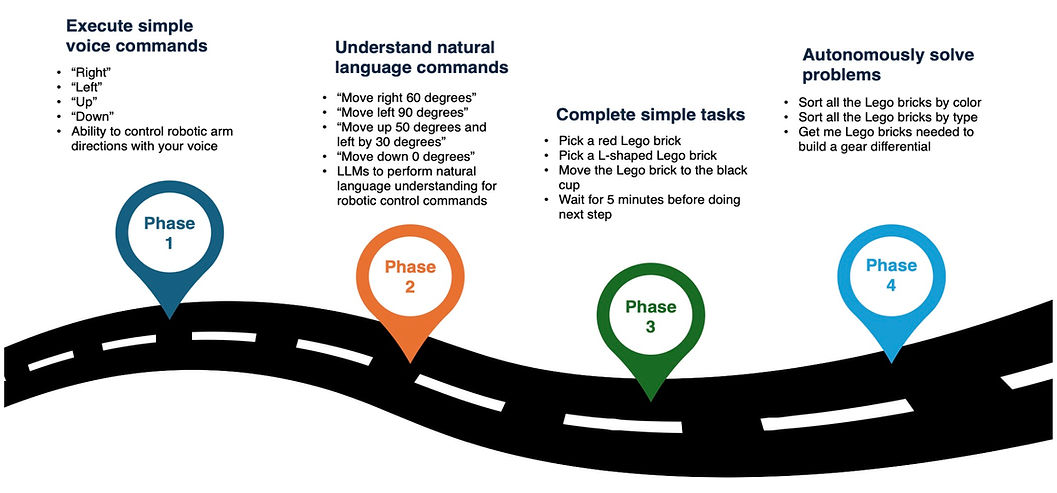

I’m developing a natural language interface for robotic arm control at the CMU Robotics Institute under Professor Chris Atkeson, as part of a broader initiative to make human-robot interaction more intuitive and accessible. The goal is to let users command robots using everyday speech, lowering the barrier for non-expert users and making robots easier to use in assistive and home environments.

The current system combines Vosk for real-time, offline speech recognition with a Mistral large language model that parses spoken commands (e.g., “move right 45° and up 60°”) and translates them into robotic actions. The prototype already executes single-movement and multi-movement voice commands end to end. The next phase will focus on high-level task understanding, so that the robot can interpret open-ended instructions (e.g., “sort the LEGO bricks by color”) and autonomously plan and execute the appropriate sequence of actions.

Aim: To create a natural language interface that makes human-robot interaction intuitive and accessible, enabling robots to understand everyday speech and autonomously execute tasks.

-

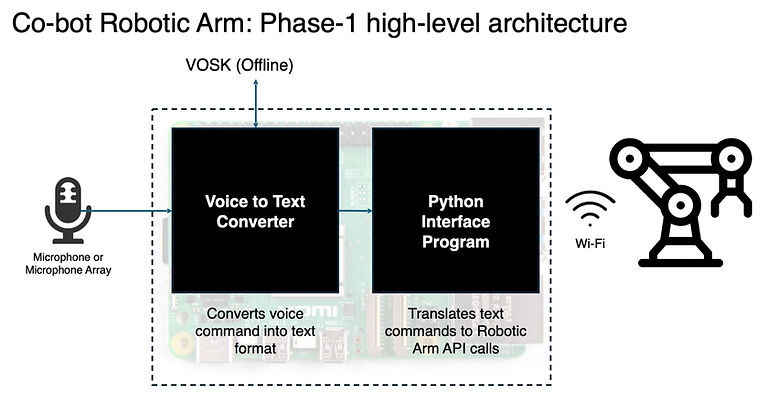

Used Vosk (offline speech-to-text) to listen to my voice

-

Recognized exact words like “right,” “left,” “up", "down"

-

Predefined mappings: “Right” → Move joint 1 negative by 30 degrees

-

Sent fixed JSON commands directly over Wi-Fi to the RoArm robot
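The keyword approach in this list can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: only the “right” mapping (joint 1, negative 30 degrees) comes from the list. The other three entries, the `joint` and `delta_degrees` field names, and the function name are assumptions, and both the Vosk transcription step and the real RoArm command format are left out.

```python
import json

# Keyword -> fixed command table. Only the "right" entry is taken from the
# text; the remaining entries and the field names are illustrative assumptions.
KEYWORD_COMMANDS = {
    "right": {"joint": 1, "delta_degrees": -30},
    "left": {"joint": 1, "delta_degrees": 30},   # assumed mirror of "right"
    "up": {"joint": 2, "delta_degrees": 30},     # assumed joint and sign
    "down": {"joint": 2, "delta_degrees": -30},  # assumed joint and sign
}


def commands_from_transcript(transcript: str) -> list[str]:
    """Return one fixed JSON command per recognized keyword, in spoken order."""
    commands = []
    for word in transcript.lower().split():
        # Strip trailing punctuation so "left." still matches "left".
        command = KEYWORD_COMMANDS.get(word.strip(".,!?"))
        if command is not None:
            commands.append(json.dumps(command))
    return commands


print(commands_from_transcript("go right"))
```

Because the table only matches exact words, a phrase such as “move left by 30 degrees” would still produce the fixed 30-degree step, whatever number is spoken. Exact-word matching cannot handle variable amounts.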

-

We use Vosk to listen and transcribe voice offline

-

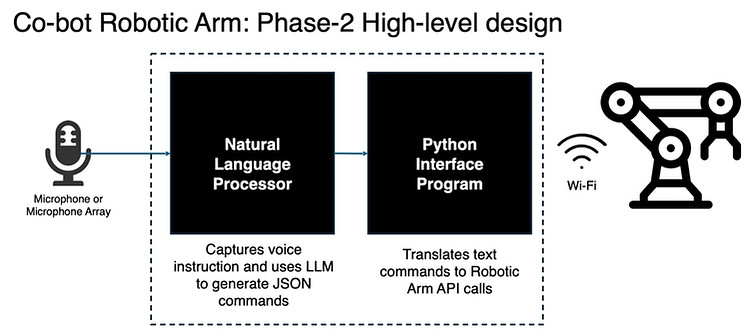

Mistral AI model takes my sentence (like “move left by 30 degrees”)

-

The model outputs a pure JSON format: joint number, direction, degrees

-

Example output:{ "joint": 1, "direction": "left", "degrees": 30 }

-

Code translates the AI’s JSON into proper movement commands
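One way the translation step could look is sketched below: parse the model's reply, check the three fields, and convert direction plus degrees into a signed joint rotation. The negative sign for “right” follows the keyword mapping described earlier. The signs for the other directions, the function name, and the returned tuple are assumptions, not the project's actual code or the RoArm command format.

```python
import json

# Sign applied to "degrees" for each direction. "right" negative follows the
# keyword mapping in the text; the other three signs are assumptions.
DIRECTION_SIGN = {"right": -1, "left": 1, "up": 1, "down": -1}
REQUIRED_FIELDS = {"joint", "direction", "degrees"}


def parse_model_output(raw: str) -> tuple[int, float]:
    """Validate the model's JSON reply and return (joint, signed_degrees)."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    direction = str(data["direction"]).lower()
    if direction not in DIRECTION_SIGN:
        raise ValueError(f"unknown direction: {direction!r}")
    return int(data["joint"]), DIRECTION_SIGN[direction] * float(data["degrees"])


joint, degrees = parse_model_output('{ "joint": 1, "direction": "left", "degrees": 30 }')
print(joint, degrees)
```

Validating before acting matters here because a language model can return extra prose or an unexpected direction. Rejecting such replies keeps malformed output from reaching the arm.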

Phase 2 - Videos

Single Command: Robot executes only one movement per sentence.

Multi Command: Robot executes multiple movements from a single sentence, one after another.
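For the multi-command case, one plausible arrangement is for the model to return a JSON array of movement objects, which the code then executes in order. The array format, the `send` callback, and the function name are assumptions for illustration. The source does not say how multi-command sentences are represented.

```python
import json
from typing import Callable


def run_sequence(raw: str, send: Callable[[dict], None]) -> int:
    """Execute every movement in the model's reply, in order; return the count.

    Accepts either a single JSON object (single command) or a JSON array of
    objects (multi command). `send` is a hypothetical stand-in for whatever
    actually transmits one movement to the arm.
    """
    parsed = json.loads(raw)
    steps = parsed if isinstance(parsed, list) else [parsed]
    for step in steps:
        send(step)  # the next movement is dispatched only after this call returns
    return len(steps)


# "move right 45° and up 60°" might come back from the model like this
# (joint 2 for "up" is an assumption):
reply = (
    '[{"joint": 1, "direction": "right", "degrees": 45},'
    ' {"joint": 2, "direction": "up", "degrees": 60}]'
)
run_sequence(reply, send=print)
```

Treating a single object as a one-element sequence lets the same code path serve both the single-command and multi-command modes.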